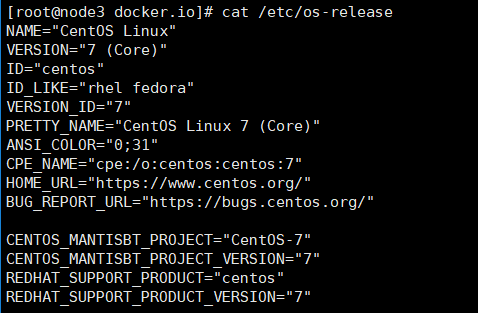

Environment:

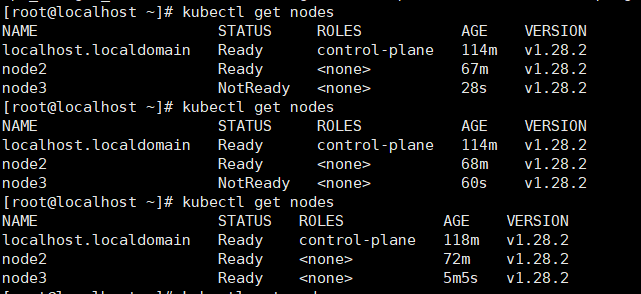

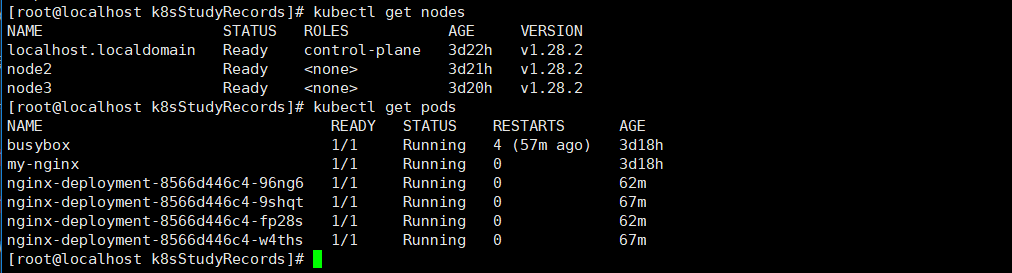

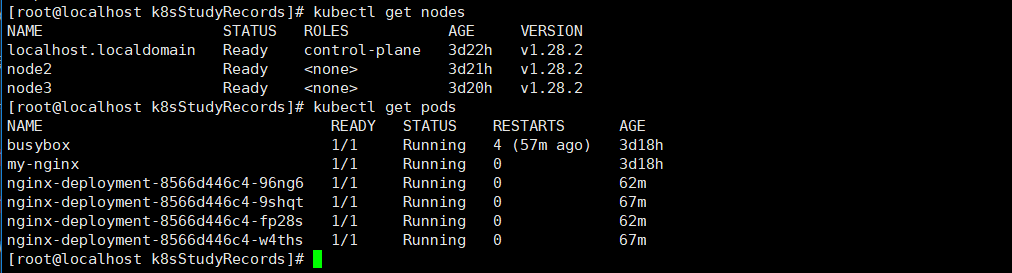

Result:

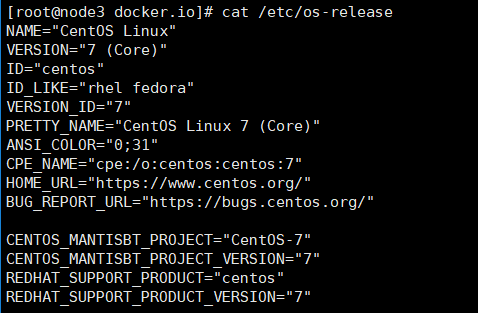

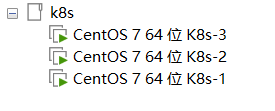

VM environment:

Master node: K8s-1 192.168.72.8

Worker node: K8s-2 192.168.72.9

Worker node: K8s-3 192.168.72.10 (any free address works, but it must differ from K8s-2's 192.168.72.9)

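Setup process (overview):

First, build one base host with the following installed:

- docker

- containerd

- kubelet kubeadm kubectl

Configure containerd:

- sandbox image registry

- systemd service unit

Clone it twice, then on each clone:

- Set the hostname

- Configure the IP

- Configure /etc/hosts

Master configuration:

- Disable swap

- Load kernel parameters

- kubeadm init

- Install the Flannel network plugin

Node configuration:

- Disable swap

- Load kernel parameters

- Join the cluster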

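In hindsight, the k8s setup itself is fairly simple. Most of the places where I got stuck were image registry configuration. I can't entirely blame the network environment in China for that: at least the mirror for the sandbox image works, and other images can be imported offline, which is not too painful. The real trouble is the images needed during initialization. Later I'll check whether those can be imported offline as well, or try installing the binaries directly offline.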

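Things to look into later:

- Whether the images used by kubeadm init can be imported offline

- Whether k8s can be installed offline (RPM, tgz, binaries)

- Registry mirrors to speed up containerd image pulls

- Hosting a private image registry for containerd and Docker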

1. Building the base host

- docker

- containerd

- kubelet kubeadm kubectl

1.1 Installing Docker

See: docker容器技术 (Docker container technology) | Regen

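1.2 Installing containerd

containerd is an industry-standard container runtime with an emphasis on simplicity, robustness, and portability. It is one of Docker's core components and can also be used on its own.

It can be installed with the package manager or from the binary release. Binary installation: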

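```shell
# Replace <version> with a containerd release number (without the leading "v")
wget https://github.com/containerd/containerd/releases/download/v<version>/containerd-<version>-linux-amd64.tar.gz
sudo tar Cxzvf /usr/local containerd-<version>-linux-amd64.tar.gz
# The config directory does not exist yet. Write through "sudo tee", because a plain
# "sudo cmd > file" redirect is performed by the unprivileged shell and fails
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml > /dev/null
# Needs a containerd.service unit; the tarball does not ship one (see 2.1)
sudo systemctl enable --now containerd
```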
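The `<version>` placeholders follow the asset naming on the containerd GitHub releases page. As a small convenience, the sketch below builds that URL for a given version and architecture; `containerd_tarball_url` is a hypothetical helper, not part of containerd. Note also that containerd's getting-started guide installs runc and the CNI plugins as separate steps on the tarball route, since the tarball only contains containerd's own binaries (if Docker was installed first, runc may already be present).

```shell
# Hypothetical helper: print the download URL of a containerd release tarball.
# Usage: containerd_tarball_url VERSION [ARCH]   (VERSION without the leading "v")
containerd_tarball_url() {
  local version="$1" arch="${2:-amd64}"
  if [ -z "$version" ]; then
    echo "usage: containerd_tarball_url VERSION [ARCH]" >&2
    return 1
  fi
  # Assets are named containerd-<version>-linux-<arch>.tar.gz under the tag v<version>
  echo "https://github.com/containerd/containerd/releases/download/v${version}/containerd-${version}-linux-${arch}.tar.gz"
}
```

Usage: `wget "$(containerd_tarball_url <version>)"`, then extract as above.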

1.3 Installing the core k8s components

Installation via the package manager:

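```shell
# Add the Aliyun mirror of the Kubernetes yum repository (writing to /etc needs root)
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF

sudo yum install -y kubelet kubeadm kubectl
# kubelet restarts in a loop until kubeadm init/join gives it a config; this is expected
sudo systemctl enable --now kubelet
```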
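Before moving on, it can help to confirm that all three tools actually landed on `PATH`. A small sketch; `require_bins` is a hypothetical helper name, not part of any Kubernetes tooling.

```shell
# Hypothetical helper: report which of the given commands are missing from PATH.
# Returns 0 when every command is found, 1 otherwise.
require_bins() {
  local missing=0 bin
  for bin in "$@"; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "missing: $bin" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `require_bins kubelet kubeadm kubectl && kubeadm version`.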

2. Configuring the base host

1. systemd service unit

2. sandbox image registry

3. Load kernel parameters

4. Disable swap

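2.1 systemd service unit

This step is only needed when containerd has no systemd unit, for example after the binary install in 1.2. If a unit already exists, skip it. Check with `systemctl status containerd`.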

```shell
sudo tee /etc/systemd/system/containerd.service > /dev/null <<EOF
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
# The tarball install puts the binary at /usr/local/bin/containerd; match the output of "command -v containerd"
ExecStart=/usr/bin/containerd
Restart=always
RestartSec=5
Delegate=yes
KillMode=process
OOMScoreAdjust=-999
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF
```

Then reload systemd and start containerd:

```shell
sudo systemctl daemon-reload
sudo systemctl enable --now containerd
```

Check the status:

```shell
sudo systemctl status containerd
```
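If the `ExecStart=` path in the unit does not match where containerd is actually installed (the release tarball puts it in /usr/local/bin, distribution packages in /usr/bin), systemd cannot start the service. A sketch that rewrites that line to the real path; the function name is hypothetical, and it relies on GNU `sed -i`.

```shell
# Hypothetical helper: point ExecStart in a containerd unit file at the installed binary.
# Usage: fix_containerd_execstart UNIT_FILE [BINARY_PATH]  (default: command -v containerd)
fix_containerd_execstart() {
  local unit="$1" bin="${2:-$(command -v containerd)}"
  if [ ! -f "$unit" ] || [ -z "$bin" ]; then
    echo "unit file or containerd binary not found" >&2
    return 1
  fi
  # Replace the whole ExecStart= line; "|" is the sed delimiter because paths contain "/"
  sed -i "s|^ExecStart=.*|ExecStart=${bin}|" "$unit"
}
```

Run it as root against /etc/systemd/system/containerd.service, then `systemctl daemon-reload` and restart containerd.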

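2.2 sandbox image registry

Reference: 搭建k8s集群初始化master节点 kubeadm init 遇到问题解决 (Troubleshooting kubeadm init when initializing the master of a k8s cluster) - 杨海星 - 博客园 (cnblogs)

Fix: edit containerd's config file. Compared with the output of `containerd config default`, the configuration below changes two values: `sandbox_image` points at the Aliyun mirror of the pause image, because the default registry (registry.k8s.io) is often unreachable from mainland China, and `SystemdCgroup = true` switches runc to the systemd cgroup driver so that it matches the kubelet.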

```shell
vim /etc/containerd/config.toml
```

After editing, restart containerd: `systemctl daemon-reload && systemctl restart containerd`. The full configuration used here:


```toml
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
  path = ""

[debug]
  address = ""
  format = ""
  gid = 0
  level = ""
  uid = 0

[grpc]
  address = "/run/containerd/containerd.sock"
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216
  tcp_address = ""
  tcp_tls_ca = ""
  tcp_tls_cert = ""
  tcp_tls_key = ""
  uid = 0

[metrics]
  address = ""
  grpc_histogram = false

[plugins]

  [plugins."io.containerd.gc.v1.scheduler"]
    deletion_threshold = 0
    mutation_threshold = 100
    pause_threshold = 0.02
    schedule_delay = "0s"
    startup_delay = "100ms"

  [plugins."io.containerd.grpc.v1.cri"]
    device_ownership_from_security_context = false
    disable_apparmor = false
    disable_cgroup = false
    disable_hugetlb_controller = true
    disable_proc_mount = false
    disable_tcp_service = true
    enable_selinux = false
    enable_tls_streaming = false
    enable_unprivileged_icmp = false
    enable_unprivileged_ports = false
    ignore_image_defined_volumes = false
    max_concurrent_downloads = 3
    max_container_log_line_size = 16384
    netns_mounts_under_state_dir = false
    restrict_oom_score_adj = false
    # Changed: pull the pause (sandbox) image from the Aliyun mirror
    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
    selinux_category_range = 1024
    stats_collect_period = 10
    stream_idle_timeout = "4h0m0s"
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    systemd_cgroup = false
    tolerate_missing_hugetlb_controller = true
    unset_seccomp_profile = ""

    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = ""
      ip_pref = ""
      max_conf_num = 1

    [plugins."io.containerd.grpc.v1.cri".containerd]
      default_runtime_name = "runc"
      disable_snapshot_annotations = true
      discard_unpacked_layers = false
      ignore_rdt_not_enabled_errors = false
      no_pivot = false
      snapshotter = "overlayfs"

      [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
          base_runtime_spec = ""
          cni_conf_dir = ""
          cni_max_conf_num = 0
          container_annotations = []
          pod_annotations = []
          privileged_without_host_devices = false
          runtime_engine = ""
          runtime_path = ""
          runtime_root = ""
          runtime_type = "io.containerd.runc.v2"

          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            BinaryName = ""
            CriuImagePath = ""
            CriuPath = ""
            CriuWorkPath = ""
            IoGid = 0
            IoUid = 0
            NoNewKeyring = false
            NoPivotRoot = false
            Root = ""
            ShimCgroup = ""
            # Changed: use the systemd cgroup driver (the generated default is false)
            SystemdCgroup = true

      [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

    [plugins."io.containerd.grpc.v1.cri".image_decryption]
      key_model = "node"

    [plugins."io.containerd.grpc.v1.cri".registry]
      config_path = ""

      [plugins."io.containerd.grpc.v1.cri".registry.auths]

      [plugins."io.containerd.grpc.v1.cri".registry.configs]

      [plugins."io.containerd.grpc.v1.cri".registry.headers]

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

    [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""

  [plugins."io.containerd.internal.v1.opt"]
    path = "/opt/containerd"

  [plugins."io.containerd.internal.v1.restart"]
    interval = "10s"

  [plugins."io.containerd.internal.v1.tracing"]
    sampling_ratio = 1.0
    service_name = "containerd"

  [plugins."io.containerd.metadata.v1.bolt"]
    content_sharing_policy = "shared"

  [plugins."io.containerd.monitor.v1.cgroups"]
    no_prometheus = false

  [plugins."io.containerd.runtime.v1.linux"]
    no_shim = false
    runtime = "runc"
    runtime_root = ""
    shim = "containerd-shim"
    shim_debug = false

  [plugins."io.containerd.runtime.v2.task"]
    platforms = ["linux/amd64"]
    sched_core = false

  [plugins."io.containerd.service.v1.diff-service"]
    default = ["walking"]

  [plugins."io.containerd.service.v1.tasks-service"]
    rdt_config_file = ""

  [plugins."io.containerd.snapshotter.v1.aufs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.btrfs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.devmapper"]
    async_remove = false
    base_image_size = ""
    discard_blocks = false
    fs_options = ""
    fs_type = ""
    pool_name = ""
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.native"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.overlayfs"]
    root_path = ""
    upperdir_label = false

  [plugins."io.containerd.snapshotter.v1.zfs"]
    root_path = ""

  [plugins."io.containerd.tracing.processor.v1.otlp"]
    endpoint = ""
    insecure = false
    protocol = ""

[proxy_plugins]

[stream_processors]

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar"

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
  "io.containerd.timeout.bolt.open" = "0s"
  "io.containerd.timeout.shim.cleanup" = "5s"
  "io.containerd.timeout.shim.load" = "5s"
  "io.containerd.timeout.shim.shutdown" = "3s"
  "io.containerd.timeout.task.state" = "2s"

[ttrpc]
  address = ""
  gid = 0
  uid = 0
```
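Instead of editing the file by hand in vim, the two changed values can be applied to a freshly generated config with `sed`. A sketch, assuming a version 2 config produced by `containerd config default` (where the relevant lines read `sandbox_image = "..."` and `SystemdCgroup = false`); the function name is hypothetical, GNU `sed -i` is assumed, and the default image tag 3.9 is taken from the config above.

```shell
# Hypothetical helper: set the sandbox (pause) image and enable the systemd cgroup
# driver in a containerd version-2 config.toml.
# Usage: patch_containerd_config CONFIG_FILE [SANDBOX_IMAGE]
patch_containerd_config() {
  local cfg="$1"
  local image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  [ -f "$cfg" ] || { echo "no such file: $cfg" >&2; return 1; }
  # Rewrite the sandbox_image value, keeping the line's original indentation
  sed -i "s|^\([[:space:]]*sandbox_image = \).*|\1\"${image}\"|" "$cfg"
  # kubeadm (v1.22+) sets the kubelet to the systemd cgroup driver, so runc should match
  sed -i 's|^\([[:space:]]*SystemdCgroup = \)false|\1true|' "$cfg"
}
```

Run it as root on /etc/containerd/config.toml, restart containerd, and confirm the effective values with `containerd config dump | grep -E 'sandbox_image|SystemdCgroup'`.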

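2.3 Loading kernel parameters

These kernel parameters concern networking. If they are not set correctly, you will run into problems later when nodes join the master and when kube-flannel starts.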

```shell
sudo modprobe br_netfilter
```

```shell
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply every file under /etc/sysctl.d without rebooting
sudo sysctl --system
```

Verify:

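```shell
# br_netfilter should be listed
lsmod | grep br_netfilter
# All three values should be 1; if not, reload the settings with: sudo sysctl --system
sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
```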
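`modprobe` only loads `br_netfilter` until the next reboot. The Kubernetes container-runtime prerequisites page persists the module through a file under `/etc/modules-load.d/`; the sketch below does that, rewrites the sysctl file, and checks the three values directly in `/proc/sys`. The helper names and the `ROOT` prefix argument are assumptions added so the functions can be tried against a scratch directory; on a real host pass no argument and run the writer as root.

```shell
# Hypothetical helpers. ROOT is a path prefix ("" on a real host).

# Persist the module and sysctl settings so they survive a reboot.
write_k8s_net_conf() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.ipv4.ip_forward = 1' > "$root/etc/sysctl.d/k8s.conf"
}

# Check that each setting is currently 1 by reading /proc/sys directly.
# The bridge-nf-call keys only exist once br_netfilter is loaded.
check_k8s_net_sysctls() {
  local root="${1:-}" ok=0 key val
  for key in net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables \
             net/ipv4/ip_forward; do
    val=$(cat "$root/proc/sys/$key" 2>/dev/null)
    if [ "$val" != "1" ]; then
      echo "not set: $key (value: ${val:-missing})" >&2
      ok=1
    fi
  done
  return "$ok"
}
```

After `write_k8s_net_conf` on the host, run `sysctl --system`; `check_k8s_net_sysctls` needs no privileges.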

2.4 Disabling swap

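```shell
# By default the kubelet refuses to start while swap is enabled
sudo swapoff -a
# Comment out swap entries in /etc/fstab so swap stays off after a reboot
sudo sed -i '/ swap / s/^/#/' /etc/fstab
```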
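To confirm that swap is off now and will stay off after a reboot, a sketch of two read-only checks. The helper names are hypothetical, and the optional file arguments exist only so the functions can be tried on sample files; `free -h` reporting 0 swap gives the same answer as the first check.

```shell
# Hypothetical helper: succeed only if no swap area is active.
# /proc/swaps always has one header line; every further line is an active swap area.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  awk 'END { exit (NR > 1) }' "$swaps"
}

# Hypothetical helper: print fstab lines that would still enable swap at boot.
active_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Skip commented lines; field 3 is the filesystem type
  awk '!/^[[:space:]]*#/ && $3 == "swap"' "$fstab"
}
```

For example: `swap_is_off && [ -z "$(active_swap_in_fstab)" ] && echo "swap disabled"`.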

2.5 Changing the hostname (optional)

Optional. This host will become the master node, so you can rename it to anything you like.

```shell
sudo hostnamectl set-hostname mastername
```

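If you change the hostname, be sure to add a matching local name-resolution entry to /etc/hosts.

If the change does not take effect, edit /etc/hostname directly.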

3. Cloning the two nodes

- Set the hostname

- Configure the IP

- Configure /etc/hosts

Do the following on each machine after cloning.

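3.1 Setting the hostname

The clones act as worker nodes and must be renamed; otherwise they cannot join the cluster, because every node name in a cluster must be unique.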

```shell
sudo hostnamectl set-hostname node2
```

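Be sure to add a matching local name-resolution entry to /etc/hosts.

If the change does not take effect, edit /etc/hostname directly.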
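By default the hostname becomes the node name, and Kubernetes requires node names to be valid DNS-1123 subdomains: lowercase letters, digits, `-` and `.`, starting and ending with an alphanumeric character, at most 253 characters. A quick pre-check before renaming a clone; `valid_node_name` is a hypothetical helper, and it does not check uniqueness across the cluster.

```shell
# Hypothetical helper: check a proposed hostname against the DNS-1123 subdomain
# rules that Kubernetes applies to node names.
valid_node_name() {
  local name="$1"
  [ -n "$name" ] && [ "${#name}" -le 253 ] || return 1
  # Dot-separated labels of [a-z0-9-], each starting and ending with [a-z0-9]
  printf '%s\n' "$name" |
    grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}
```

For example: `valid_node_name node2 && sudo hostnamectl set-hostname node2`.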

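3.2 Configuring the IP

Edit the config file of the network interface the VM uses for its connection (ens33 here), giving each clone its own address.

See also: Linux网络配置 (Linux network configuration) | Regen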

```shell
vim /etc/sysconfig/network-scripts/ifcfg-ens33
```
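With CentOS 7-style network scripts, a static address typically needs the keys printed below. A sketch that generates the file for one node; the helper name is hypothetical, and the prefix length and gateway are inputs you must take from your own VM network (for example, the gateway of a VMware NAT network). After cloning, a `UUID` or `HWADDR` line copied from the original VM may also need to be removed.

```shell
# Hypothetical helper: print a static-IP ifcfg file for the given interface.
# Usage: ifcfg_static DEVICE IPADDR PREFIX GATEWAY [DNS1]
ifcfg_static() {
  if [ "$#" -lt 4 ]; then
    echo "usage: ifcfg_static DEVICE IPADDR PREFIX GATEWAY [DNS1]" >&2
    return 1
  fi
  local dev="$1" ip="$2" prefix="$3" gw="$4" dns="${5:-}"
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=${dev}
DEVICE=${dev}
ONBOOT=yes
IPADDR=${ip}
PREFIX=${prefix}
GATEWAY=${gw}
EOF
  # Only emit a DNS server when one was given
  if [ -n "$dns" ]; then
    echo "DNS1=${dns}"
  fi
}
```

For example, `ifcfg_static ens33 192.168.72.9 24 <gateway> | sudo tee /etc/sysconfig/network-scripts/ifcfg-ens33`, then restart networking (on CentOS 7, `systemctl restart network`).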

3.3 Configuring /etc/hosts

```shell
vim /etc/hosts
# Add a line that maps this node's hostname, for example:
127.0.0.1 node2  # the hostname set above
```
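Mapping the hostname to 127.0.0.1 lets a node resolve its own name; a common alternative is to list every node with its cluster IP on all three machines so each can also resolve the others. A sketch that appends an entry only when the name is not already present; `add_hosts_entry` is a hypothetical helper, and the names you pass are whatever hostnames you chose above.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already listed.
# Usage: add_hosts_entry HOSTS_FILE IP NAME
add_hosts_entry() {
  local file="$1" ip="$2" name="$3"
  # Look for NAME as a hostname field on a non-comment line, ignoring trailing comments
  if awk -v n="$name" '
      /^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) { if ($i ~ /^#/) break; if ($i == n) found = 1 } }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, as root: `add_hosts_entry /etc/hosts 192.168.72.8 <master-hostname>`, repeated for each node.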

4. Master configuration

- kubeadm init

- Install the Flannel network plugin

4.1 kubeadm init

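```shell
# --apiserver-advertise-address: the master's IP
# --pod-network-cidr: must match Flannel's network (10.244.0.0/16 by default)
# --image-repository: pull control-plane images from the Aliyun mirror instead of registry.k8s.io
kubeadm init \
  --apiserver-advertise-address=192.168.72.8 \
  --pod-network-cidr=10.244.0.0/16 \
  --image-repository=registry.aliyuncs.com/google_containers
```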
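kubectl on the master needs a kubeconfig before the Flannel step will work. kubeadm init's own output lists the steps (`mkdir -p $HOME/.kube`, `sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config`, `sudo chown $(id -u):$(id -g) $HOME/.kube/config`), or, as root, `export KUBECONFIG=/etc/kubernetes/admin.conf`. The same steps as a function; the name and the optional path arguments are assumptions added so it can be exercised with scratch files.

```shell
# Hypothetical helper mirroring the post-init steps printed by kubeadm init.
# Usage: setup_kubeconfig [SRC] [DEST]
setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dest="${2:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dest")"
  if [ -r "$src" ]; then
    cp "$src" "$dest"
  else
    # admin.conf is normally readable only by root; copy it and hand it back to this user
    sudo cp "$src" "$dest" && sudo chown "$(id -u):$(id -g)" "$dest"
  fi || return 1
  # The file holds cluster-admin credentials; keep it private
  chmod 600 "$dest"
}
```

Then `setup_kubeconfig && kubectl get nodes` should list the master (NotReady until a network plugin is installed).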

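kubeadm init then prints the command that nodes use to join the cluster (along with the commands that set up a kubeconfig for kubectl):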

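```shell
# Copy the exact command printed by kubeadm init; it ends with a sha256:... value for --discovery-token-ca-cert-hash
kubeadm join 192.168.72.8:6443 --token gisx9m.q8kqbw0zndrm8i5b --discovery-token-ca-cert-hash
```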

If you lose it, regenerate it by running this on the master node:

```shell
kubeadm token create --print-join-command
```
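If you only need the `--discovery-token-ca-cert-hash` value, the kubeadm join documentation derives it from the cluster CA certificate with openssl. Wrapped as a function; the name is hypothetical, the pipeline follows the kubeadm docs, and it assumes an RSA CA key (kubeadm's default).

```shell
# Hypothetical helper: compute the value kubeadm join expects after
# --discovery-token-ca-cert-hash, i.e. sha256 of the CA's public key.
# Usage: ca_cert_hash [CA_CERT]   (default: /etc/kubernetes/pki/ca.crt on the master)
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}" pub hex
  # Extract the PEM public key; fails if the certificate is missing or invalid
  pub=$(openssl x509 -pubkey -noout -in "$cert" 2>/dev/null) || return 1
  # Make sure it parses as an RSA key, so we never hash an empty DER stream
  printf '%s\n' "$pub" | openssl rsa -pubin -noout 2>/dev/null || return 1
  hex=$(printf '%s\n' "$pub" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:${hex}"
}
```

Usage on a worker, with a token from `kubeadm token list`: `kubeadm join 192.168.72.8:6443 --token <token> --discovery-token-ca-cert-hash "$(ca_cert_hash ca.crt)"`, where ca.crt is a copy of the master's CA certificate.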

4.2 Installing the Flannel network plugin

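```shell
# The project now lives at flannel-io/flannel; its README points to
# https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```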

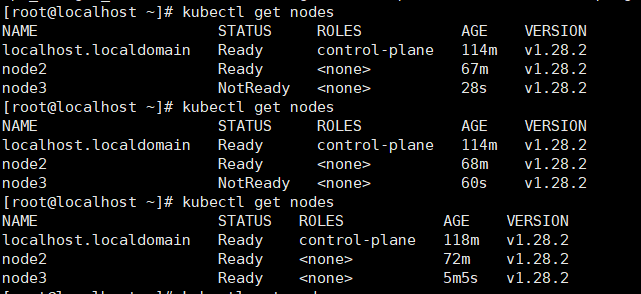
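Flannel's manifest carries its own pod network in the `net-conf.json` entry of the `kube-flannel-cfg` ConfigMap, `10.244.0.0/16` by default, which is why kubeadm init was given `--pod-network-cidr=10.244.0.0/16`. A sketch that compares the two values in a downloaded copy of the manifest before applying it; the function name is hypothetical, and the `sed` extraction assumes the manifest's `"Network": "..."` line format.

```shell
# Hypothetical helper: compare flannel's "Network" with the kubeadm pod CIDR.
# Usage: check_flannel_cidr MANIFEST POD_CIDR
check_flannel_cidr() {
  local manifest="$1" want="$2" got
  # Take the first "Network": "<cidr>" value found in the manifest
  got=$(sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$manifest" | head -n 1)
  if [ -z "$got" ]; then
    echo "no \"Network\" entry found in $manifest" >&2
    return 1
  fi
  if [ "$got" != "$want" ]; then
    echo "mismatch: flannel uses $got, kubeadm was given $want" >&2
    return 1
  fi
  echo "ok: $got"
}
```

For example: `curl -fsSLO <manifest URL> && check_flannel_cidr kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml`.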

5. Node configuration

- Join the cluster

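```shell
# Run on each worker node, using the exact command printed on the master
# (it ends with a sha256:... value for --discovery-token-ca-cert-hash)
kubeadm join 192.168.72.8:6443 --token gisx9m.q8kqbw0zndrm8i5b --discovery-token-ca-cert-hash
```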

Then wait for kube-flannel to come up on the new nodes. This can take a while, depending on network speed and machine specs.
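Instead of re-running `kubectl get nodes` by hand, the wait can be scripted. A sketch of a filter over `kubectl get nodes --no-headers` output (columns NAME, STATUS, ROLES, AGE, VERSION); `all_nodes_ready` is a hypothetical helper, and it treats a cordoned node (`Ready,SchedulingDisabled`) as ready.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and
# succeed only if there is at least one node and every node's STATUS is Ready.
all_nodes_ready() {
  awk '{ n++; if ($2 != "Ready" && $2 !~ /^Ready,/) bad = 1 }
       END { exit (n == 0 || bad) }'
}
```

Usage: `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done`. To watch the Flannel pods themselves, recent manifests place them in the `kube-flannel` namespace (`kubectl get pods -n kube-flannel -o wide`).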

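6. Troubleshooting summary

The steps above already avoid many pitfalls. These are the issues where it is easiest to get stuck:

Fixing containerd registry (sandbox image) problems

See:

搭建k8s集群初始化master节点 kubeadm init 遇到问题解决 (Troubleshooting kubeadm init when initializing the master of a k8s cluster) - 杨海星 - 博客园 (cnblogs)

Fixing kube-flannel installation problems

Apply the Flannel manifest:

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```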